SIDKIT

What if agents could write directly to hardware? SIDKIT pairs LLM agents with a Rust toolchain: one agent designs sound, one generates code, and the toolchain compiles and flashes. The same Teensy becomes a synth, sequencer, or game console via prompt.

The Thesis

Everyone will be vibe coding. SIDKIT extends it into the physical world — a living, reconfigurable instrument designed for agent collaboration. Users move from Text → Code → Firmware → Sound without leaving the browser.

The Hard Problem

How do you build AI-native hardware from the ground up, so that agents can code games, synthesis modules, and sequencers from nothing but a voice or text prompt? No device currently allows this. What is the optimal interface for non-coders and beginners? How do people learn with AI? How do you teach them to articulate complex tasks and features?

Solution: SIDKIT's component and design systems allow firmware to be flashed directly from prompts. Before committing a flash, it previews the interactive device in the browser using WASM. The WASM version mirrors the hardware, so you hear it before flashing, and the digital twin ensures that what you hear is what you get. This speeds up iteration and abstracts coding away from users. Errors and their solutions are saved in a database, so agents can look up common problems.

The Category: Text to Hardware

Same Teensy, different device. Via prompt it becomes:

- A wavetable synthesiser
- A Pac-Man melody generator
- A 4-track step sequencer
- A hybrid engine (physical modelling + SID chip emulation)
- A Zelda-style adventure with procedural audio

You're not generating firmware — you're generating devices.

Triple-Agent Architecture

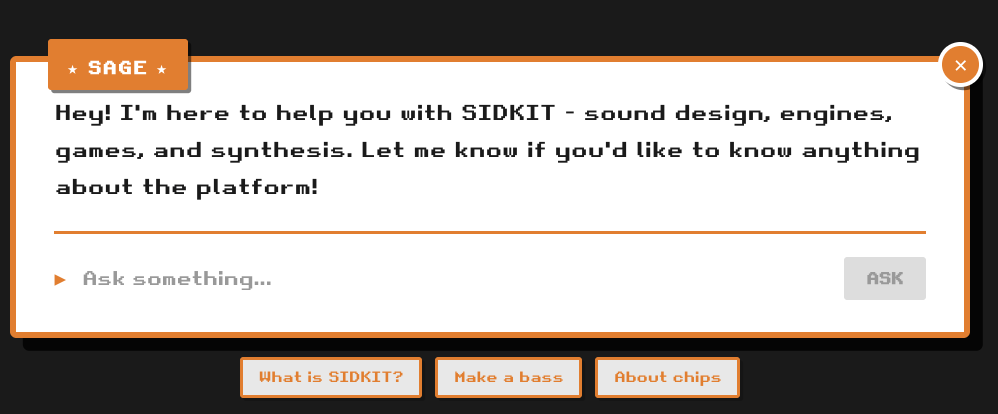

| Agent | Function | LLM | Stack |
|---|---|---|---|
| SAGE | Platform guide, sound design, SysEx generation | Claude Haiku | MCP Tools |
| Builder | C++ code generation, module creation | Claude Sonnet/Opus | Code Gen |
| Tool Server | Compile (arm-gcc) + Flash (teensy_loader) | No | Rust + Axum |

System Flow

Pipeline

Text (natural language) → Code (generated C++) → Firmware (compiled binary) → Hardware (Teensy 4.1)

Design Decisions

- Semantic Knowledge Base: 80+ JSON documents covering chips, synthesis methods, games, and SDK patterns. Cloudflare AI embeddings, cached in Vectorize.
- MCP Tools (SAGE): 9 specialised tools, including get_platform_spec, get_engine_specs, search_knowledge, and control_synth.
- Dual Design Systems: Two parallel component libraries, one for the firmware OLED (C++/U8g2) and one for the WebUI (React/TypeScript).
- Error Learning: SQLite-based pattern storage. Successful fixes are stored, so the next time that error appears, the agent recalls the solution.
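The error-learning idea can be sketched roughly as follows. This is a minimal illustration, not SIDKIT's actual implementation: an in-memory `Map` stands in for the SQLite table, and `errorSignature`, `FixStore`, and the normalisation rules are hypothetical names and choices made for this example.

```typescript
// Hypothetical sketch: an in-memory stand-in for the SQLite pattern store.
type Fix = { signature: string; fix: string; hits: number };

// Reduce a compiler error to a stable signature by stripping the parts that
// vary between builds: file locations, quoted identifiers, and numbers.
function errorSignature(message: string): string {
  return message
    .replace(/\S+\.(?:cpp|h|hpp|ino):\d+(?::\d+)?:\s*/g, "")
    .replace(/'[^']*'/g, "'?'")
    .replace(/\d+/g, "N")
    .trim()
    .toLowerCase();
}

class FixStore {
  private fixes = new Map<string, Fix>();

  // Call this only after the fix has produced a successful build.
  remember(message: string, fix: string): void {
    const signature = errorSignature(message);
    this.fixes.set(signature, { signature, fix, hits: 0 });
  }

  // Return the stored fix for a matching error, or undefined if unseen.
  recall(message: string): string | undefined {
    const entry = this.fixes.get(errorSignature(message));
    if (entry) entry.hits += 1;
    return entry?.fix;
  }
}
```

Matching on a normalised signature rather than the raw message means a fix learned in one file can be recalled when the same class of error appears in another.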

Hardware Platform

| Component | Specification |
|---|---|
| MCU | Teensy 4.1 (ARM Cortex-M7 @ 600 MHz) |
| Display | 256x128 4-bit greyscale OLED |
| Controls | 8 encoders + 24 buttons |
| Audio | 2x stereo + USB Audio (48 kHz) |
| Architecture | 4 engine slots, 1 sequencer slot, 1 game slot; any sound engine in any slot |

Games as Sequencers

Games aren't just sound effects — they're melody generators. Every action triggers musical events that can be recorded and looped.

Pac-Man meets Orca. The player navigates a maze while falling notes hit the character, creating melodies. Gameplay becomes composition.

Lemmings-inspired rhythm pathfinder. AI creatures walk toward exits that trigger drums. The player manipulates obstacles to create rhythmic variations.

Probability-driven step sequencer with sprite animations. Raindrops fall with weighted randomness — each hit triggers notes. Visual weather patterns become generative compositions.
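A probability-driven step can be sketched in a few lines. This is only an illustration under assumptions: the `Step` shape, `playPass`, and the choice of a seeded PRNG (mulberry32, used so a pass can be replayed) are not taken from SIDKIT itself.

```typescript
// Small seeded PRNG (mulberry32) so a generated pass can be replayed exactly.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // value in [0, 1)
  };
}

// Hypothetical step shape: a MIDI note and the chance that a "raindrop" hits.
type Step = { note: number; probability: number }; // probability in [0, 1]

// One pass over the pattern: each step fires only if the roll lands under
// its weight. Steps that do not fire yield null.
function playPass(steps: Step[], rand: () => number): (number | null)[] {
  return steps.map((s) => (rand() < s.probability ? s.note : null));
}
```

With this shape, a step with probability 1 always fires and a step with probability 0 never does; everything in between varies from pass to pass unless the same seed is reused.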

Game of Life meets step sequencer. Cellular automata and L-systems determine step probability, velocity, and timing. Grid patterns and step swaps become generative compositions. Sprite animations are triggered by tracks and steps.
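One way cellular automata could drive step probability is sketched here. The mapping is an assumption made for illustration (each grid column is one step, and the share of live cells in that column is its probability); `lifeStep` and `columnProbabilities` are hypothetical names, and the wrap-around edges are a design choice of this sketch.

```typescript
type Grid = boolean[][]; // grid[row][col], true = live cell

// One Game of Life generation. Edges wrap around (toroidal grid) so that
// patterns loop the way a step sequence does.
function lifeStep(grid: Grid): Grid {
  const rows = grid.length;
  const cols = grid[0].length;
  return grid.map((row, r) =>
    row.map((alive, c) => {
      let neighbours = 0;
      for (let dr = -1; dr <= 1; dr++) {
        for (let dc = -1; dc <= 1; dc++) {
          if (dr === 0 && dc === 0) continue;
          const rr = (r + dr + rows) % rows;
          const cc = (c + dc + cols) % cols;
          if (grid[rr][cc]) neighbours++;
        }
      }
      // Standard rules: survive on 2 or 3 neighbours, be born on exactly 3.
      return alive ? neighbours === 2 || neighbours === 3 : neighbours === 3;
    })
  );
}

// Assumed mapping: column = step, fraction of live cells = step probability.
function columnProbabilities(grid: Grid): number[] {
  const rows = grid.length;
  return grid[0].map(
    (_, c) => grid.reduce((sum, row) => sum + (row[c] ? 1 : 0), 0) / rows
  );
}
```

Advancing the grid once per bar and re-reading the column probabilities gives a pattern that evolves on its own while staying locked to the step grid.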

Agent-to-Hardware Protocol (A2HW)

1. Preview Layer: WASM synth mirrors hardware
2. SysEx Control: Real-time changes without reflashing
3. Error Learning: Pattern matching from successful fixes
4. Digital Twin: WebUI and hardware UI stay in sync
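As a rough sketch of what a SysEx control message might look like: the framing bytes (0xF0 start, 0xF7 end, 7-bit data bytes) and the non-commercial manufacturer ID 0x7D come from the MIDI specification, but the message layout, `encodeParamChange`, and `decodeParamChange` are assumptions invented for this example, not SIDKIT's actual protocol.

```typescript
const SYSEX_START = 0xf0;
const SYSEX_END = 0xf7;
const NON_COMMERCIAL_ID = 0x7d; // MIDI manufacturer ID reserved for non-commercial use

type ParamChange = { slot: number; param: number; value: number };

function inRange(n: number, max: number): boolean {
  return Number.isInteger(n) && n >= 0 && n <= max;
}

// Hypothetical layout: F0 7D <slot> <param> <value MSB> <value LSB> F7.
// SysEx data bytes must keep the high bit clear, so a 14-bit value is split
// into two 7-bit bytes.
function encodeParamChange({ slot, param, value }: ParamChange): Uint8Array {
  if (!inRange(slot, 0x7f) || !inRange(param, 0x7f)) {
    throw new RangeError("slot and param must be integers in 0..127");
  }
  if (!inRange(value, 0x3fff)) {
    throw new RangeError("value must be an integer in 0..16383");
  }
  return Uint8Array.of(
    SYSEX_START, NON_COMMERCIAL_ID, slot, param,
    (value >> 7) & 0x7f, value & 0x7f, SYSEX_END
  );
}

// Inverse of encodeParamChange; returns null for anything malformed.
function decodeParamChange(msg: Uint8Array): ParamChange | null {
  if (msg.length !== 7 || msg[0] !== SYSEX_START || msg[6] !== SYSEX_END) return null;
  if (msg[1] !== NON_COMMERCIAL_ID) return null;
  if (msg.slice(2, 6).some((b) => b > 0x7f)) return null;
  return { slot: msg[2], param: msg[3], value: (msg[4] << 7) | msg[5] };
}
```

In a browser, bytes like these could be sent with the Web MIDI API's `MIDIOutput.send`, provided access was requested with `sysex: true`. Because the firmware only has to parse a short message, a parameter can change in real time without a compile-and-flash cycle.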

This isn't another synth with an AI assistant. It's a platform for generating devices.

Stack

Next.js 15 • React • TypeScript • WASM (reSID) • Rust • Axum • C++ • PlatformIO • Cloudflare Workers/D1/Vectorize